While “Big Data” may sound like nothing more than a buzzword, many companies are using it for a wide variety of online applications; for instance, to target marketing efforts to customers more effectively. But what is big data, really?

Searching for future technologies that can help your business achieve efficiency and cost-effectiveness? The 36th Gulf Information Technology Exhibition (GITEX) offered just that.

The five-day event opened its doors on Sunday, 16 October 2016, at the Dubai World Trade Centre. It was the largest ICT exhibition in the Middle East, Africa and South Asia. The event was inaugurated by Shaikh Hamdan Bin Mohammad Bin Rashid Al Maktoum, Crown Prince of Dubai and Chairman of the Dubai Executive Council.

So, what was the GITEX technology exhibition all about? The event featured live demonstrations of next-generation technology solutions from government entities and international companies that can transform Middle East businesses. Industry leaders discussed key verticals including marketing, healthcare, finance, intelligent cities, retail, education and energy.

Over 200 investors and influential tech investment companies from Silicon Valley, Europe, Asia and the Middle East, including SoftBank Group International, 500 Startups and many other venture partners, arrived in Dubai to explore, evaluate and potentially fund talented startups. The group also included global giants such as Facebook, Dropbox and Spotify.

Key highlights included a large indoor VR activation powered by Samsung and game-changing tech from 4,000 solution providers, including AR, VR, AI, wearables, drones and more. The GITEX Startup Movement examined the global startup scene, featuring 350+ breakthrough startups from 52 countries. Customized networking programmes were also on offer for startups to discover how their global contemporaries have "been there, done that" and created an impact.

In today’s era of technology, there is an ever-increasing need for entrepreneurs to harness technologies that help companies achieve efficiency and cost-effectiveness. FTI has always believed that a strong culture of innovation, in all areas of the company, is an essential contributor to business success. FTI prioritizes R&D and technology over cost, as we firmly believe that these functions are critical to ensuring our products represent the best quality within the optical media industry.

In line with this drive for innovation in business and services, Dubai’s Roads and Transport Authority (RTA) showcased a smart streetlight system at GITEX 2016. According to Eng Maitha bin Adai, CEO of RTA’s traffic and roads agency, three systems were showcased at GITEX this year, and together they will help meet green economy requirements by reducing carbon emissions. They include the Central Wireless Road Lighting Control System, which sets multiple synchronized timetables for dimming streetlights. A new feature of the smart lighting system is a trial WiFi service installed at the Dubai Water Canal on Sheikh Zayed Road.

RTA also displayed high-definition pedestrian detection camera systems with a twist: these new-generation cameras produce pedestrian statistics by number, sex and age group.

Dubai Customs’ Smart Virtual Agent initiative was also showcased at GITEX 2016. It provides a smart channel for communicating with customers, allowing their queries about customs services, procedures and regulations to be answered more efficiently. The Smart Virtual Agent is an intelligent tool that operates 24/7 without the need for human staff. This cost-effective measure reduces support costs and frees resources for other duties. The self-improving platform draws on Dubai Customs’ database and its memory of similar cases to answer customer inquiries.

Many GITEX visitors had the opportunity to try out the Smart Inspection Glasses on display at the Dubai Customs stand. These tech-heavy glasses support field inspection of containers: customs inspectors can view a shipment’s customs declaration, X-ray images and risk level simultaneously. Inspectors can submit inspection reports using either the virtual keyboard or the voice input feature.

Furthermore, Dubai Customs showcased eight happiness-led initiatives and innovations under the umbrella of the Ports, Customs and Free Zone Corporation pavilion. Other innovations included the Authorized Economic Operator, Smart Inspection Lab, Smart Customs Route, Bags Smart Customs Inspection System and the Endangered Species Exhibition.

Five major trends caught the eye at this year’s GITEX: robotics and artificial intelligence (AI), biometrics, 3-D printing, mixed reality and drones. With artificial intelligence on the rise and huge amounts of data needing to be stored, further development of cloud storage is on the horizon. Cloud storage is a cloud computing model in which data is stored on remote servers accessed over the internet, or “cloud.” The storage servers are built on virtualization techniques and are maintained, managed and backed up online.

The technology world is increasingly realizing that Optical Digital Media is a safer way to store data than cloud technology solutions. This is evidenced by multiple data breaches in which personal photographs and other information have been stolen and distributed to the public, and by the fact that Facebook has publicly stated that it has chosen to store its data on Optical Digital Media rather than in the cloud.

Falcon Technologies International (FTI) leads the way with regard to Optical Digital Media security and memory technology, and is conducting potentially ground-breaking research in this field with academic partners.

Technology has come a long way. We see constant development and growth in computing hardware, software and storage. Some technologists say, however, that those gains are stalling, perhaps limited by the physical boundaries of the raw materials used in central processing units.

Microsoft thinks it may have found a solution: field-programmable gate arrays (FPGAs). An FPGA is an integrated circuit designed to be configured by a customer or a designer after manufacturing, hence “field-programmable”. To define the behavior of the FPGA, the user provides a design either in a hardware description language (HDL) or as a schematic.

The HDL form is better suited to large structures because you can specify them numerically rather than having to draw every piece by hand. However, schematic entry can allow for easier visualization of a design. FPGAs give you flexibility in your designs and offer a way to change how parts of a system work without introducing a large amount of cost and risk of delays into the design schedule.

Many designers have the false impression that building a system with a modern FPGA means dealing with millions of logic gates and a massive number of connections just to do something useful. If that were the case, FPGA use wouldn’t be growing; there would only be about half a dozen FPGA users left. In fact, FPGA designers have done much of the heavy lifting by adding commonly needed components, so all you have to concentrate on is customizing the functions that are specific to your application. Examples of such ready-made components include clock generators, dynamic random access memory (DRAM) controllers and even whole multicore microprocessors.
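To make "field-programmable" a little more concrete, here is a minimal Python sketch of a lookup table (LUT), the kind of small configurable logic element that FPGAs are commonly built from. It is a software toy and not an HDL design or a vendor tool flow; the `LookupTable` class and its method names are hypothetical and exist only for this illustration.

```python
from itertools import product


class LookupTable:
    """Toy model of an n-input lookup table (LUT); hypothetical, for illustration."""

    def __init__(self, num_inputs):
        self.num_inputs = num_inputs
        # One stored output bit per input combination; all zeros until configured.
        self.table = [0] * (2 ** num_inputs)

    def configure(self, func):
        """'Program' the LUT by storing func's output for every input combination."""
        for bits in product((0, 1), repeat=self.num_inputs):
            self.table[self._index(bits)] = int(bool(func(*bits)))

    def evaluate(self, *bits):
        """Return the stored output bit; nothing is recomputed at this point."""
        return self.table[self._index(bits)]

    def _index(self, bits):
        if len(bits) != self.num_inputs:
            raise ValueError("wrong number of inputs")
        index = 0
        for bit in bits:
            index = (index << 1) | (1 if bit else 0)
        return index


lut = LookupTable(2)
lut.configure(lambda a, b: a and b)  # the element now behaves as an AND gate
and_result = lut.evaluate(1, 1)
lut.configure(lambda a, b: a ^ b)    # same element, reconfigured as an XOR gate
xor_result = lut.evaluate(1, 1)
```

The same object answers differently before and after the second `configure` call, which is the essence of reprogramming logic after manufacturing. A real FPGA combines very many such elements with configurable routing.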

These computer chips, which can be reprogrammed for specific tasks after they leave the factory floor, are adding firepower to Microsoft’s network of on-demand computing power.

Using all of the power of Microsoft’s data centers worldwide, the company could translate all 5 million articles on the English language Wikipedia in less than a tenth of a second.

Over the past two years, Microsoft has quietly been installing FPGAs on the new servers it has added to its global fleet of data centers. Their present uses include ranking results in the Bing search engine and speeding up Microsoft’s Azure cloud-computing network. Microsoft is alone among major cloud-computing players in widely deploying FPGA technology.

There are also implications for high performance computing and data storage such as solutions for Network Attached Storage (NAS), Storage Area Network (SAN), servers, and storage appliances.

Project Catapult is the technology behind Microsoft’s hyperscale acceleration fabric. This supercomputing substrate is built to accelerate the company’s efforts in networking, security, cloud services and artificial intelligence.

Project Catapult integrates an FPGA into nearly every new Microsoft datacenter server. By exploiting the reconfigurable nature of FPGAs at the server, the Catapult architecture delivers the efficiency and performance of custom hardware without the cost, complexity and risk of deploying fully customized ASICs into the datacenter. Moreover, the performance gain over CPUs is monumental, and it comes with less than a 30% increase in cost and no more than a 10% increase in power.
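The cost and power bounds quoted above can be turned into a rough efficiency estimate. The sketch below is only an illustration: the text gives no actual speedup figure, so the 2x value is a placeholder assumption, and the function name is hypothetical.

```python
def relative_efficiency(speedup, cost_increase, power_increase):
    """Return (performance per unit cost, performance per unit power),
    relative to a CPU-only server, which scores 1.0 on both."""
    perf_per_cost = speedup / (1.0 + cost_increase)
    perf_per_power = speedup / (1.0 + power_increase)
    return perf_per_cost, perf_per_power


# Upper bounds quoted in the text: 30% extra cost, 10% extra power.
# The 2x speedup is a placeholder assumption, not a figure from the article.
per_cost, per_power = relative_efficiency(
    speedup=2.0, cost_increase=0.30, power_increase=0.10
)
print(f"performance per unit cost:  {per_cost:.2f}x")
print(f"performance per unit power: {per_power:.2f}x")
```

Under these bounds, any speedup above 1.3x improves performance per unit cost, and any speedup above 1.1x improves performance per unit power.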

Due to their programmable nature, FPGAs are an ideal fit for numerous markets. As the industry leader, Xilinx provides comprehensive solutions consisting of FPGA devices, advanced software, and configurable, ready-to-use IP cores for a wide range of markets and applications.

The advances in computing power and storage capability, combined with the substantial savings and efficiency introduced through FPGA technology, mean the world of supercomputing is more accessible than ever.

Falcon Technologies International LLC has developed the Century Archival product line, a cutting-edge technology with built-in gold or platinum layers to ensure maximum security, longevity and protection for stored data.

Century Archival products are designed to secure data for hundreds of years – FTI’s Century Archival DVD product has been demonstrated in testing to be capable of storing data for up to 200 years, whereas the Century Archival CD product has demonstrated a longevity in excess of 400 years, making the Century Archival line the most durable and secure archival digital media product available in the market today.

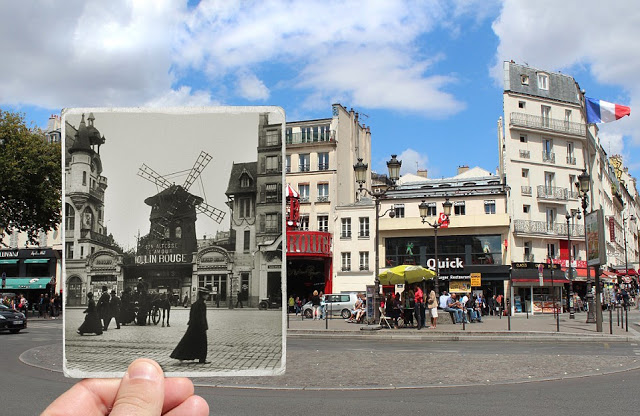

Imagine that you suddenly woke up in the autumn of 1916. What kind of world would you see around you? Can you just close your eyes, take a moment and imagine?

Exactly a century ago, telecommunications were in their infancy and still regarded as something out of science fiction. The most widespread means of transport were railroads and maritime vessels, accountants and bankers carefully kept their records exclusively on paper, and the world as a whole still seemed immense. There were, of course, relatively fast ways of transferring information, such as the telegraph: a message from Europe could be delivered to North America in only 15 minutes via the transatlantic cable, but this was something only a select few could afford.

Who would have dared to imagine that a hundred years later we would be sending control commands to a robotic rover operating on the surface of another planet, and that it would take us roughly the same 15 minutes? A quarter of an hour to deliver a signal to a masterpiece of human engineering and artificial intelligence that is never closer than about 55 million kilometers away. Such a distance is hard to picture even today, in the 21st century, but it is nonetheless happening.
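As a rough check on these figures, the one-way signal delay is simply the distance divided by the speed of light. The sketch below (the function names are illustrative, and it assumes the planet in question is Mars) suggests that 55 million kilometers corresponds to a delay of about 3 minutes, while a 15-minute delay corresponds to a distance of roughly 270 million kilometers, which Mars reaches at other points in its orbit.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, km/s


def one_way_delay_minutes(distance_km):
    """Minutes for a radio signal to cover distance_km at the speed of light."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60.0


def distance_for_delay_km(delay_minutes):
    """Distance in km that a radio signal covers in delay_minutes."""
    return delay_minutes * 60.0 * SPEED_OF_LIGHT_KM_S


closest_approach_delay = one_way_delay_minutes(55e6)   # delay at 55 million km
fifteen_minute_distance = distance_for_delay_km(15)    # distance for a 15-minute delay

print(f"{closest_approach_delay:.1f} minutes at 55 million km")
print(f"{fifteen_minute_distance / 1e6:.0f} million km for a 15-minute delay")
```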

This small example of technical progress makes us think about the future and what it will look like. Over the last 100 years, humanity has managed to increase the speed of communication exponentially, and this seems to be directly linked to the overall pace of scientific growth.

Recently we have started to hear the term “Internet of Things” more often. Some say it is a phenomenon that will take humanity to the next level of development. Others have never even heard of it. This is typical of brand-new concepts. Coming back to the “100 years ago” example, it is fair to mention that the telephone wasn’t taken seriously by many people at the very beginning. Some even joked about “how it would look if a gentleman and a lady kept entertaining themselves with wireless telephone boxes instead of enjoying each other’s company while walking in the park”. Sounds hilarious, right?

So what is the Internet of Things? In 2013 the Global Standards Initiative on Internet of Things (IoT-GSI) defined the IoT as “the infrastructure of the information society.”

As you probably know, societal development is usually divided into several distinct stages: feudal society, the dominant model in the Middle Ages, was replaced by industrial society after the Industrial Revolution in the 18th century. Later, in the 20th century, that model got a significant push from overall technical progress, and with the emergence of information and communication technologies we are now in a transition period leading into the post-industrial era, a stage of society that is very often called the “Information Society”.

The infrastructure of this society will be created by next-generation technologies connected to each other through the global network of the Internet. And this infrastructure will change the ways of mass production and global trade forever.

However, this “Informational Revolution” brings some challenges, one of the biggest being data security and the safety of communication. Nothing new under the sun, as they say: when the first commercial airlines were launched, there was much concern about flight safety (concerns that are still there, even though commercial aviation will soon celebrate its 100th anniversary).

Andrew Tierney and Ken Munro of Pen Test Partners (a UK-based IT security company) created the first virus targeting one of the essential parts of any smart-home technology: the internal temperature control system. They later explained that the malware was specially designed to demonstrate the weak points of the IoT concept. The results of their experiment were demonstrated in August at the Def Con IT conference.

For the experiment, they used one of the most popular thermostat models. Tierney and Munro discovered vulnerabilities in the device that allowed them to install malicious software remotely. The hacked thermostat began to swing the room temperature from minimum to maximum and then displayed a demand to transfer money to the intruders’ account. Tierney and Munro said that they planned to pass all the information about the vulnerabilities they found to the thermostat’s manufacturer.

The Internet of Things is taking shape today, and this process is irreversible. But it brings data and connectivity security issues to the top of the list. Manufacturers should never underestimate these issues and should pay maximum attention to the possible weak points of their products. This can be achieved through careful and comprehensive QC/QA procedures.

Falcon Technologies International has never underestimated data security; it is one of the basic values we put at the core of every single product we develop. Once data is recorded on a Century Archival disc, it is safe for centuries, and we give a 100% guarantee of that.

Cloud-based storage services such as Apple’s iCloud, Google Drive, Dropbox and many others have become part of our daily activities, in both our personal and professional lives. It is certainly very convenient to have all your files stored somewhere “in the cloud” and accessible from all the devices connected to the Internet (which is practically any device, since everything is hooked up to the web nowadays, right?). It is a great technology that makes our lives so much easier, but in the euphoria we may have forgotten about one of the major issues with “clouds”: their security. This is an area where most consumer-oriented cloud storage services are still very vulnerable.

On August 31, 2014, a collection of almost 500 private pictures of various celebrities, mostly women, was posted on the 4chan image board and later disseminated by other users on websites and social networks. The images were initially believed to have been obtained via a breach of Apple’s cloud services suite, iCloud, but it later emerged that the hackers could have taken advantage of a security issue in the iCloud API that allowed them to make unlimited attempts at guessing victims’ passwords. On September 20, 2014, a second batch of similar private photos of additional celebrities was leaked by hackers. Less than a week later, on September 26, a third batch was also leaked.
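The weakness described here, unlimited password guesses, is commonly countered by capping failed attempts per account. The following is a minimal sketch of that general idea, not a description of Apple’s actual fix; the class name, the function name and the thresholds are all hypothetical.

```python
import time


class LoginAttemptLimiter:
    """Locks an account after too many failed logins within a time window."""

    def __init__(self, max_failures=5, window_seconds=900, clock=time.monotonic):
        self.max_failures = max_failures      # hypothetical threshold
        self.window_seconds = window_seconds  # hypothetical 15-minute window
        self.clock = clock                    # injectable so it can be tested
        self._failures = {}                   # account -> failure timestamps

    def _recent_failures(self, account):
        # Drop failures that have aged out of the window, keep the rest.
        cutoff = self.clock() - self.window_seconds
        recent = [t for t in self._failures.get(account, []) if t > cutoff]
        self._failures[account] = recent
        return recent

    def is_locked(self, account):
        return len(self._recent_failures(account)) >= self.max_failures

    def record_failure(self, account):
        self._recent_failures(account).append(self.clock())

    def record_success(self, account):
        self._failures.pop(account, None)


def try_login(limiter, account, password, check_password):
    """Return True on success; refuse to even check the password while locked."""
    if limiter.is_locked(account):
        return False
    if check_password(account, password):
        limiter.record_success(account)
        return True
    limiter.record_failure(account)
    return False
```

With a cap like this in place, an attacker gets only a handful of guesses per window instead of an unlimited number, which makes brute-force guessing far slower. Real services usually combine it with other measures such as two-factor authentication.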

The leak prompted increased concern from analysts surrounding the privacy and security of cloud-based services such as iCloud—with a particular emphasis on their suitability to store sensitive, private information.

Apple reported that the leaked images were the result of compromised accounts, using “a very targeted attack on user names, passwords and security questions, a practice that has become all too common on the Internet”.

In October 2014, the FBI searched a house in Chicago and seized several computers, cell phones and storage drives after tracking the source of a hacking attack to an IP address linked to an individual named Emilio Herrera. A related search warrant application mentioned eight victims with the initials A.S., C.H., H.S., J.M., O.W., A.K., E.B., and A.H., which supposedly points to stolen photos of Abigail Spencer, Christina Hendricks, Hope Solo, Jennette McCurdy, Olivia Wilde, Anna Kendrick, Emily Browning and Amber Heard. According to law enforcement officials, Herrera is just one of several people under investigation and the FBI has carried out various searches across the US.

A more recent incident happened in the UK, where a major tabloid reported that it had been offered private images featuring the Duchess of Cambridge’s sister, Pippa Middleton. The images were said to also feature the Duchess’s children, Prince George (the future King of England) and Princess Charlotte.

The police are investigating the case, their main lead being a hack of an iCloud account. The leak is also said to include approximately 3,000 private photographs that were stored in Pippa Middleton’s iCloud account.

As the events described above show, cloud-based storage is certainly not the most reliable data storage solution. It is very flexible and accessible from any device, but it is important to keep in mind that it may sometimes be accessed by unauthorised parties who may use sensitive and private information against its owners.

One reason offline data storage is still used today is that some information should be stored offline for security reasons. Private images, spreadsheets with secret data, classified investigation files and many other kinds of sensitive information cannot be reached by a remote hacker if they are stored on an optical disc locked in a safe. The data cannot be accessed unless the disc is in the physical possession of the person who wants to access it, and physical access to information is easier to control.

Data archiving has always been a challenge for both enterprises and manufacturers of data storage solutions. The basic laws of thermodynamics mean that stored data tends to deteriorate in the long run, so it is important to understand the needs of each data archiving project in order to choose the right storage system, based on the appropriate technology and kept under the proper environmental conditions. This should also be combined with relevant migration and replication practices to improve safety and accessibility over extended periods of time.

The most common means of data archiving today are flash memory, hard disk drives, magnetic tape and optical discs. Data storage architects usually use one of these technologies, or their combinations, when designing their systems.

Let’s try and take a closer look at these technologies.

First consider flash memory in archiving. At the 2013 Flash Memory Summit Jason Taylor from Facebook, in a keynote speech, presented the idea of using really low endurance flash memory for a cold storage archive. According to Marty Czekalski of Seagate at the MSST conference, flash writing is best done at elevated temperatures while data retention and data disturb favor storage at lower temperatures. The JEDEC JESD218A endurance specification states that if flash power-off temperature is at 25 degrees C then retention is 101 weeks—that isn’t quite 2 years. So it appears conventional flash memory may not have good media archive life and should only be used for storing transitory data.

Hard disk drives are often used in active archives because the various hard disk drive arrays can be continually connected to the storage network, allowing relatively rapid access to content. Hard disk drive active archives can also be combined with flash memory to provide better overall system performance. However hard disk drives do not last forever – they can wear out with continued use and even if the power is turned off the data in the hard disk drive will eventually decay due to thermal erasure (again we run into the enemy of data retention, thermodynamics).

In practice, hard disk drive arrays have built in redundancy and data scrubbing to help retain data for a long period. It is probably good advice to assume that HDDs in an active archive will last only 3-5 years and will need to be replaced over time.

Less active archives, where data is stored for longer periods, call for storage media that can retain information for an extended period of time. Two digital storage media are commonly used for long-term cold storage applications: magnetic tape and optical discs.

Let’s look at these two storage technologies and compare them for long term cold storage applications.

Magnetic tapes used for archiving come in half-inch tape cartridges. The popular formats used today are the LTO format supported by the Ultrium LTO Program, the T10000 series tapes from Oracle/StorageTek and the TS series enterprise tapes from IBM. Under low-temperature, low-humidity storage conditions and with low usage, modern magnetic tapes have a storage life of several decades, and native storage capacities are currently as high as 8.5 TB per cartridge.

When not actively being written or read, magnetic tape cartridges can sit in a library system consuming no power. Digital magnetic tape is thus a good candidate for long-term data retention and has a long history of use in many industries for this application.

Optical storage has also been used for long-term data retention, and environmental stress tests indicate that the latest generation of optical media should have an expected lifetime of at least several decades. FalconMedia Century Archival discs are able to store data for hundreds of years thanks to the special gold and platinum reflective layers used in their construction. At the Open Compute Project Summit in January 2014, Facebook presented a prototype 1 PB optical disc storage system with 10,000 discs. When Facebook put the system into actual operation, it reduced the company’s storage costs by 50% and energy consumption by 80% compared with its previous HDD-based cold storage system.

Ken Wood from Hitachi Data Systems presented research results at the MSST Conference supporting the hypothesis that migration/remastering costs for 5 PB of content over 75 years are much lower for an optical system with the media replaced every 50 years than for tape and HDD systems, which need more frequent replacement.
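One way to see why replacement frequency matters is simply to count how many times the archive has to be copied to fresh media over the retention period. The sketch below is an illustration only, not the model used in the MSST research: the 50-year optical interval comes from the text, the 5-year HDD interval is the upper end of the 3-5 year range mentioned earlier, and the 10-year tape interval is an assumption made purely for this example.

```python
import math


def migrations_needed(retention_years, media_lifetime_years):
    """Number of times the archive must be copied to fresh media.

    The initial write is not counted; a migration happens each time the
    media reaches the end of its assumed service life before the
    retention period is over.
    """
    if retention_years <= 0 or media_lifetime_years <= 0:
        raise ValueError("years must be positive")
    return max(0, math.ceil(retention_years / media_lifetime_years) - 1)


RETENTION_YEARS = 75  # horizon used in the comparison

# Replacement intervals in years; the tape value is a hypothetical assumption.
replacement_interval_years = {"optical": 50, "tape": 10, "hdd": 5}

migrations = {
    media: migrations_needed(RETENTION_YEARS, years)
    for media, years in replacement_interval_years.items()
}
print(migrations)
```

Each migration means buying new media and copying the whole 5 PB again, so the number of migrations is a rough proxy for the remastering cost over the archive’s life.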

A lot of digital data has persistent value, so long-term retention of that data is very important. An Oracle talk at MSST estimated that storage for archiving and retention is currently a $3B market, growing to over $7B by 2017. Several storage technologies can play a role in an archive system depending upon the level of activity expected in the archive. Flash memory can provide caching of frequently used or anticipated content to speed retrieval times, while HDDs are often used for data that is accessed relatively frequently.

Magnetic tape and optical discs provide low-cost, long-term inactive storage, with additional latency for data access compared with HDDs due to the time needed to mount the media in a drive. Thus, depending on the access requirements for an archive, it may be most effective to combine two or even three technologies to get the right balance of performance and storage costs. As the total content that we keep increases, these considerations will become more important in driving new generations of storage technologies geared toward protecting valuable content and carrying it into the future.
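The idea of combining technologies according to access requirements can be sketched as a simple placement rule. This is a hypothetical illustration, not a recommended policy: the `ArchiveItem` type, the `choose_tier` function and every threshold in it are assumptions chosen only to show the shape of the decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArchiveItem:
    name: str
    accesses_per_year: float   # expected number of reads per year
    max_wait_seconds: float    # longest retrieval delay the user will accept


def choose_tier(item, hot_threshold=1000, warm_threshold=12, mount_seconds=120):
    """Pick a storage tier for an item; all thresholds are illustrative assumptions."""
    if item.accesses_per_year >= hot_threshold:
        return "flash cache"
    # Tape and optical media must be mounted in a drive before reading, so
    # items that are read regularly or cannot wait for a mount stay on disk.
    if item.accesses_per_year >= warm_threshold or item.max_wait_seconds < mount_seconds:
        return "hdd active archive"
    return "tape or optical cold storage"


items = [
    ArchiveItem("product catalogue", 50_000, 1),
    ArchiveItem("last quarter's invoices", 40, 30),
    ArchiveItem("closed case files", 0.2, 86_400),
]
placement = {item.name: choose_tier(item) for item in items}
print(placement)
```

In practice the thresholds would be derived from measured access patterns and media costs rather than fixed by hand.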

One of the world’s leading smartphone manufacturers recently got themselves in a tricky situation that is very hard to manage at the moment, both logistically and PR-wise.

Two weeks ago, Samsung had no choice but to launch one of the biggest global handset recalls ever. It was prompted by 35 registered cases of phones exploding or catching fire during charging. The Galaxy Note 7 was launched on August 19th, and as of today nearly 2.5 million phones are to be returned to the manufacturer: a major setback for the Korean technology giant that severely undermines its efforts to push its phones up the value chain.

It wouldn’t be such a big deal, but we all know about the so-called “domino effect”.

Other Samsung products, such as the recently released Galaxy S7 Edge and the soon-to-be-presented Galaxy Tab S3, are facing problems as well. Since all of them are assembled in the same factories as the Note 7, consumers may no longer associate them with terms like “trendy” or “reliable” (most likely, these terms will be replaced by “exploding batteries” and “poor quality” deep in consumers’ minds).

The US Federal Aviation Administration has already started discussing whether to prohibit the in-flight use of mobile devices. Samsung is questioned every single day about whether the Note 8 model will be safe, and there is always a chance that the Note 7 case will push the FAA to prohibit the use of mobile devices during flights.

What happened to Samsung is truly regrettable; in the long run this failure could result in a major decline in brand image and a further drop in global sales, a mistake that will cost the Korean giant a lot. This case brings us back to the production stage, where quality control and quality assurance exist precisely to avoid such negative outcomes.

Quality control emphasizes testing of products to uncover defects and reporting to management who make the decision to allow or deny a product release, whereas quality assurance attempts to improve and stabilize production (and associated processes) to avoid, or at least minimize, issues which may potentially lead to the defects in future.

Samsung’s battery failure is a good lesson for everyone in the technology and consumer electronics market: it is always better to spend more time and focus on quality control than to lose track of things in a blind effort to catch up with your competitors.

______________

DISCLAIMER: The above article uses the Samsung case as an example of the importance of QC/QA procedures, and was published to stress the importance of these processes. Quality and reliability are among FTI’s core values, and we have used the Samsung case to emphasize once again that FTI learns from others’ mistakes. It is one more argument in favour of proper QC and QA procedures, which should always be at the top of any production company’s priority list.

People who are used to hooking headphones up to their smartphones could soon find themselves searching for a non-existent connector. It had been rumoured for more than six months, and it has finally been confirmed: Apple presented the all-new iPhone 7 last week, and guess what? Engineers at Apple’s home in Cupertino said “Fare thee well!” to the 3.5 mm audio jack connector.

Now, since Apple is undoubtedly the world’s leading smartphone manufacturer, the other market players will follow its example, and the era of wired headphones may soon be over (just like the recent end of the VHS tape era).

However, the sad thing is that the headphone jack is a very good connector. It’s a universal interface that can still be plugged into your smartphone, your tablet and computer, your TV, hi-fi, radio, Game Boy or console. And it has been used widely for decades, more or less replacing the larger 1/4-inch jacks (which date from the 1870s and were originally used in manual telephone exchanges) since the 1960s for all but specialist applications, such as electric guitars and some more powerful amps.

As smartphones have become the primary music device for a whole generation and more, most headphones spend the majority of their time plugged into these pocket computers. But now the jack’s dominance is being contested.

Several smartphone manufacturers started shipping handsets without 3.5 mm sockets even before Apple’s move with the iPhone 7 last Wednesday. For example, Lenovo’s new modular Moto Z drops the headphone socket in favour of a dongle that plugs into the relatively new USB Type-C socket in the bottom. China’s LeEco has also dumped the socket, while chip giant Intel is actively encouraging others to kill off the analogue 3.5 mm socket in favour of USB Type-C.

According to the Android Authority blog, moving from an analogue to a digital connector has both positive and negative aspects. Moreover, the article states that both options are expected to sit side by side in the market for the foreseeable future, and that it is still too early to say the 3.5 mm jack will be dumped completely.

Why would Apple and other smartphone manufacturers dump the handy, helpful, user-friendly headphone jack? There are several reasons. The Lightning port in the bottom of an iPhone is already capable of outputting audio, and is needed for power, so if one of the two has to go to save a little bit of space, the 3.5mm jack gets the boot.

Rene Oehlerking, chief marketing officer of Jaybird (a wireless headphone manufacturer), is sure that the days of the analogue headphone jack are over. He believes that the technology has always been like a ghost from the analogue past in a world of digital technology: it is exactly the same interface that was used with the famous Walkman players first introduced back in 1979.

Even though there are different, sometimes even opposing, opinions on the future of the 3.5 mm audio jack, it seems clear that the technology will gradually reduce its presence in the mass market over the next five years. But there is no doubt that its authority will remain untouched in the music-recording and movie-production industries, where the quality of sound monitoring and mastering is essential.

The situation with optical media is very similar. Realistically speaking, CDs and DVDs are gradually moving from the mass market to niche industries such as data archiving and sound and video production. These industries still rely on formats commonly considered “outdated”, since their reliability is beyond question.

For example, video-production and music-recording studios still tend to use high-end optical media, such as the FalconMedia Premium Line, to store large volumes of sound and video material. It is cheaper and safer, since optical media does not require a constant electricity supply and has a much longer lifespan.

In conclusion, it is not always necessary to chase the latest inventions and implement new technologies immediately after their introduction. Sometimes old but tried-and-true things turn out to be far more reliable and safe.